In the future, 2022 may be remembered as the year when generative artificial intelligence (AI) began to have a significant impact. Generative AI refers to a category of AI models capable of creating media content. These models are usually driven by text prompts written by the user, and they can produce media in several forms, such as text and images. For example, a user might enter a prompt such as "From a theoretical perspective of human agency, write a 1,000-word literature review of the psychological resilience literature".

Generative AI is currently dominated by large language models (LLMs), such as OpenAI's GPT-3, and text-to-image models, such as Stable Diffusion, and it is improving rapidly; models for audio, video, and music may mature soon. These models have transformed the potential for generating data: with tools such as ChatGPT and Stable Diffusion, it is now possible to generate natural-sounding text and photorealistic, high-quality images at an unprecedented scale.

Main Components of Generative AI Architecture

Generative AI architecture refers to the overall structure and components used to build and deploy generative AI models. While there can be variations based on specific use cases, a typical generative AI architecture consists of the following key components:

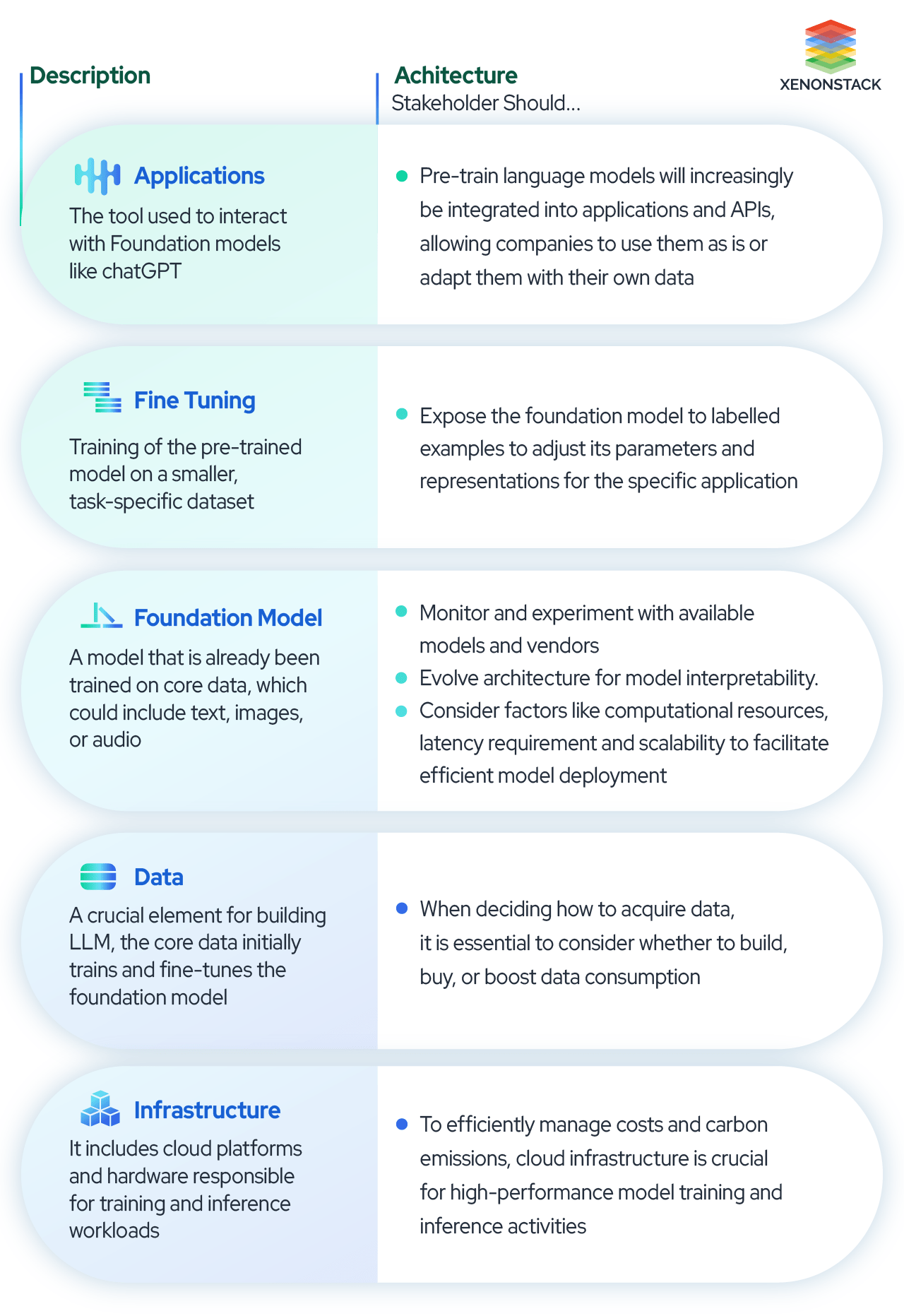

1. Data Processing Layer

This layer involves collecting, preparing, and processing data for the generative AI model. It includes data collection from various sources, data cleaning and normalization, and feature extraction.

2. Generative Model Layer

This layer generates new content or data using machine learning models. It involves model selection based on the use case, training the models using relevant data, and fine-tuning them to optimize performance.

3. Feedback and Improvement Layer

This layer focuses on continuously improving the generative model's accuracy and efficiency. It involves collecting user feedback, analyzing generated data, and using insights to drive improvements in the model.

4. Deployment and Integration Layer

This layer integrates and deploys the generative model into the final product or system. It includes setting up a production infrastructure, integrating the model with application systems, and monitoring its performance.

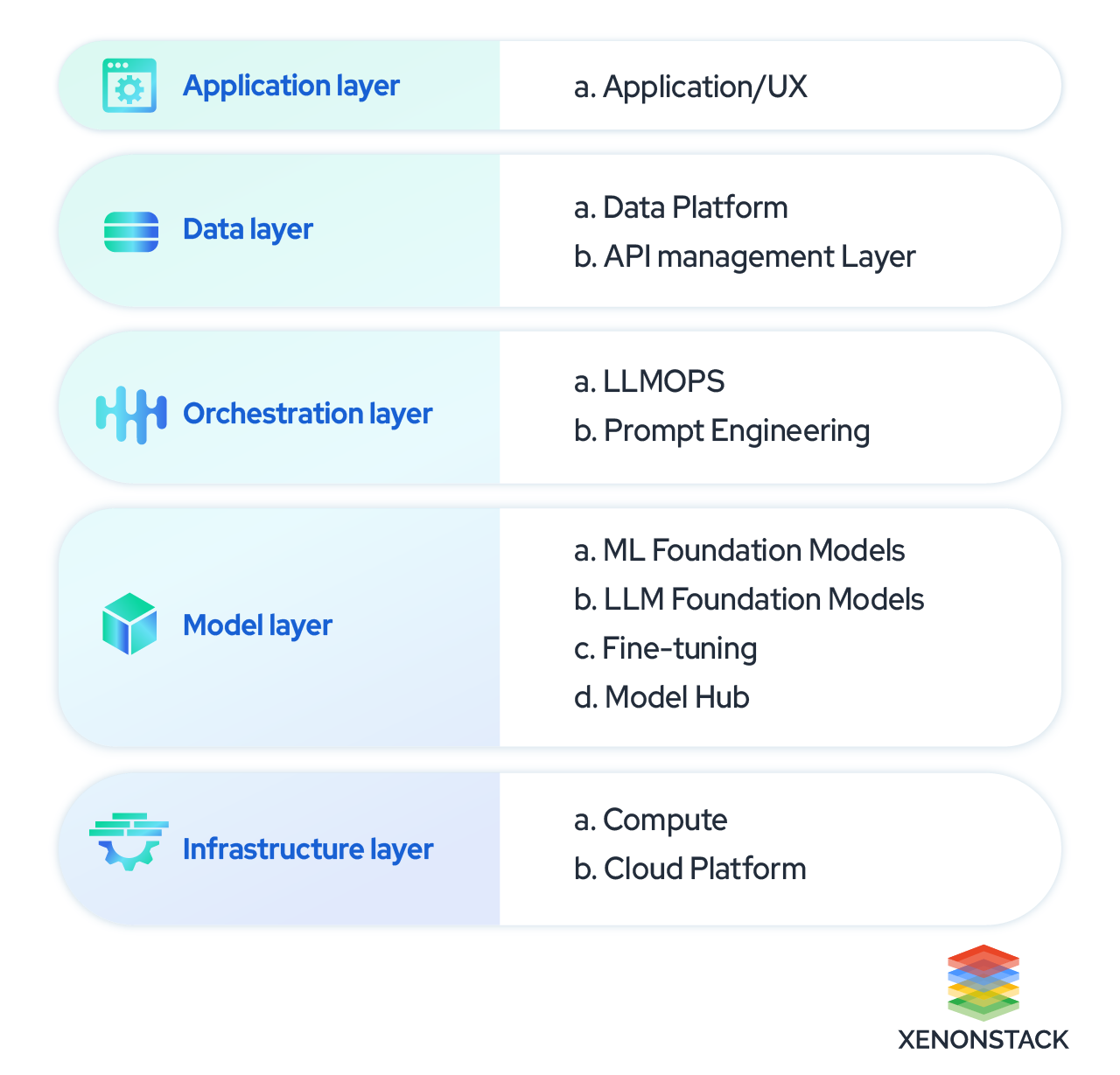

Layers of Generative AI Architecture

The architecture of a generative AI system typically consists of multiple layers, each responsible for specific functions. A typical generative AI tech stack includes the following key layers:

1. Application layer

The application layer in the generative AI tech stack enables humans and machines to collaborate seamlessly, making AI models accessible and easy to use. Applications can be classified into end-to-end apps that use proprietary models and apps without proprietary models. End-to-end apps use proprietary generative AI models developed by companies with domain-specific expertise. Apps without proprietary models are built on open-source generative AI frameworks or libraries, enabling developers to build custom models for specific use cases. These tools democratize access to generative AI technology, fostering innovation and creativity.

2. Data platform and API management layer

High-quality data is crucial to achieving better outcomes in Gen AI. However, getting the data into the proper state can take up as much as 80% of development time, covering data ingestion, cleaning, quality checks, vectorization, and storage. While many organizations have a data strategy for structured data, they also need an unstructured data strategy that aligns with their Gen AI strategy in order to unlock value from unstructured data.
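
As a rough illustration of the preparation steps named above (cleaning, quality checks, vectorization, and storage), here is a minimal sketch using only the Python standard library. The function names, the minimum-length rule, the chunk size, and the hashed bag-of-words vectorizer are all illustrative assumptions; a production stack would typically use a learned embedding model and a dedicated vector database instead of a Python list.

```python
import hashlib
import re

DIM = 64  # size of the hashed feature vector (illustrative choice)

def clean(text: str) -> str:
    """Normalize whitespace and case."""
    return re.sub(r"\s+", " ", text).strip().lower()

def passes_quality_check(text: str, min_words: int = 3) -> bool:
    """Reject empty or very short records (hypothetical rule)."""
    return len(text.split()) >= min_words

def chunk(text: str, size: int = 8) -> list[str]:
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def vectorize(text: str) -> list[float]:
    """Hashed bag-of-words: each word increments one of DIM buckets."""
    vec = [0.0] * DIM
    for word in text.split():
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    return vec

def ingest(raw_docs: list[str]) -> list[dict]:
    """Clean, filter, deduplicate, chunk, and vectorize raw documents."""
    store, seen = [], set()
    for raw in raw_docs:
        doc = clean(raw)
        if not passes_quality_check(doc) or doc in seen:
            continue
        seen.add(doc)
        for piece in chunk(doc):
            store.append({"text": piece, "vector": vectorize(piece)})
    return store

docs = [
    "Generative AI   models need HIGH-quality data.",
    "generative ai models need high-quality data.",  # duplicate after cleaning
    "ok",                                            # too short, dropped
    "Unstructured data such as PDFs, emails, and chat logs must be cleaned "
    "before vectorization and storage.",
]
store = ingest(docs)
print(len(store))  # 3
```

With these sample inputs, the duplicate and the too-short record are dropped, the first document becomes one chunk, and the 16-word document becomes two chunks.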

3. Orchestration Layer - LLMOps and Prompt Engineering

LLMOps provides tooling, technologies, and practices for adapting and deploying models within end-user applications. LLMOps includes activities such as selecting a foundation model, adapting it to a specific use case, evaluating the model, deploying it, and monitoring its performance. A foundation model is adapted mainly through prompt engineering or fine-tuning.

Fine-tuning adds complexity because it requires data labeling, model training, and deployment to production. Several tools have emerged in the LLMOps space, including point solutions for experimentation, deployment, monitoring, observability, prompt engineering, and governance, as well as end-to-end LLMOps tools.
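
In practice, prompt engineering and prompt management often come down to maintaining reusable, versioned prompt templates. The sketch below shows one simple way to do that with Python's `string.Template`; the `PROMPTS` registry, its version keys, and the few-shot format are hypothetical, and the resulting string would be sent to whichever model API the application uses (no specific API is assumed here).

```python
from string import Template

# Hypothetical versioned prompt registry; in practice this might live in a
# prompt-management tool or a version-controlled file.
PROMPTS = {
    ("summarize", "v1"): Template("Summarize the following text:\n$text"),
    ("summarize", "v2"): Template(
        "You are a concise technical writer.\n"
        "$examples\n"
        "Summarize the following text in at most $max_words words:\n$text"
    ),
}

def format_examples(examples: list[tuple[str, str]]) -> str:
    """Render few-shot examples as input/output pairs."""
    return "\n".join(f"Text: {src}\nSummary: {dst}\n" for src, dst in examples)

def build_prompt(task: str, version: str, **fields) -> str:
    """Look up a template by (task, version) and fill in its fields."""
    template = PROMPTS[(task, version)]
    return template.substitute(**fields)

few_shot = [("The meeting moved from Monday to Tuesday.", "Meeting now on Tuesday.")]
prompt = build_prompt(
    "summarize", "v2",
    examples=format_examples(few_shot),
    max_words=20,
    text="LLMOps covers model selection, adaptation, evaluation, deployment, and monitoring.",
)
print(prompt)
```

Keying templates by task and version makes it possible to compare prompt variants and roll back a change, which is one of the concerns LLMOps tooling addresses.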

4. Model layer and Hub

The model layer encompasses several kinds of models, including machine learning foundation models, LLM foundation models, fine-tuned models, and a model hub. Foundation models serve as the backbone of generative AI. These deep learning models are pre-trained to create specific types of content and can be adapted for various tasks. Building them requires expertise in data preparation, model architecture selection, training, and tuning. Foundation models are trained on large public and private datasets. However, training these models is expensive, so only a few tech giants and well-funded startups currently dominate the market. Model hubs are essential for businesses looking to build applications on top of foundation models: they provide a centralized location to access and store foundation and specialized models.

5. Infrastructure Layer

The infrastructure layer of generative AI models includes the cloud platforms and hardware responsible for training and inference workloads. Conventional computer hardware cannot efficiently handle the massive amounts of data and computation required to create content with generative AI systems. Large clusters of GPUs or TPUs (specialized accelerator chips) are needed to process data across billions of parameters in parallel. NVIDIA and Google dominate the chip design market, and TSMC produces almost all accelerator chips. Therefore, most businesses prefer to build, tune, and run large AI models in the cloud, where they can easily access computational power and manage their spending as needed. The major cloud providers have the most comprehensive platforms for running generative AI workloads and preferential access to hardware and chips.
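
A back-of-the-envelope calculation shows why a single conventional machine is not enough. The sketch below estimates the memory needed just to hold a model's weights; the 175-billion-parameter size matches GPT-3, while 16-bit (2-byte) weights and 80 GB of memory per accelerator are illustrative assumptions. Training needs several times more memory than this for gradients, optimizer state, and activations, so the result is only a lower bound.

```python
import math

def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory (in GB, 1 GB = 1e9 bytes) needed to store the weights alone."""
    return num_params * bytes_per_param / 1e9

def min_accelerators(num_params: float, gb_per_device: float = 80.0,
                     bytes_per_param: int = 2) -> int:
    """Lower bound on the number of devices needed just to hold the weights."""
    return math.ceil(weight_memory_gb(num_params, bytes_per_param) / gb_per_device)

params = 175e9  # GPT-3 scale
print(weight_memory_gb(params))   # 350.0
print(min_accelerators(params))   # 5
```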

Our primary focus has been on LLMs and text-to-image models so far. However, generative AI models can generate media beyond text and images, such as video and music.

Generative models are a type of machine learning model that can produce new instances of data similar to those in a given dataset. They learn the underlying patterns and structures of the training data and use them to generate fresh samples with similar properties. In simpler terms, generative models can create new data that looks like the data they were trained on.

Generative models are used for image synthesis, text generation, and music composition. They can capture the features and complexity of the training data, enabling them to produce innovative and diverse outputs.

1. Large Language Models

Large Language Models (LLMs) are mathematical models that represent patterns found in natural language use. They can generate text, answer questions, and hold conversations by making probabilistic inferences about the next word (more precisely, the next token) in a sequence. Essentially, they build human-sounding, contextually appropriate language word by word. It is important to note that despite the "AI" label, LLMs are incapable of thinking and have no knowledge of the world beyond the word sequences in their training data. One way to describe LLMs is that they are good at predicting what might come next based on what has been said before.

These language models hold a multi-dimensional representation of how words have been used in context, learned from a vast dataset of training examples. For instance, OpenAI's GPT-3 represents each token as a 12,288-dimensional vector and was trained on a dataset of roughly 499 billion tokens. These language models are foundational, general representations of real-world discourse patterns. On their own they are not tailored to specific tasks, but they provide a powerful starting point for models with a specific purpose.

For example, GPT-3 was not optimized for conversation, so human AI trainers, working in tandem with a separate "reward" model, trained a chat-optimized model from the GPT-3.5 series known as ChatGPT. Microsoft has launched a revamped version of Bing search that runs on a customized version of OpenAI's GPT-4; Google has released Bard, which uses its own LLM, PaLM 2; and Meta (formerly Facebook) has developed its own model, LLaMA.
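
The idea of predicting the next word from what came before can be illustrated with a bigram model, the simplest possible language model, which conditions only on the single previous word. It is a toy stand-in: real LLMs use neural networks over subword tokens and condition on far longer contexts, but the generation loop (predict a distribution over the next word, sample from it, append, repeat) has the same shape.

```python
import random
from collections import Counter, defaultdict

def train_bigram(corpus: str) -> dict[str, Counter]:
    """Count how often each word follows each other word."""
    words = corpus.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, word: str) -> dict[str, float]:
    """Turn raw counts into a probability distribution over the next word."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}

def generate(counts, start: str, length: int, seed: int = 0) -> list[str]:
    """Sample one word at a time, each conditioned on the previous word."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length):
        probs = next_word_probs(counts, out[-1])
        if not probs:
            break  # no known continuation
        words, weights = zip(*probs.items())
        out.append(rng.choices(words, weights=weights)[0])
    return out

corpus = "the model predicts the next word and the model samples the next word"
counts = train_bigram(corpus)
print(next_word_probs(counts, "the"))  # {'model': 0.5, 'next': 0.5}
print(" ".join(generate(counts, "the", 6)))
```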

2. Text-to-image models

Text-to-image models, such as Midjourney or DALL-E 2, create images using a simple yet effective technique. These models are trained on millions of labeled images, each represented numerically and projected into a latent space. During training, noise is gradually added to the images until they become entirely random, and the model learns to reverse this process. The user's text prompt guides, or conditions, that reversal.

At generation time, the model starts with random pixels and gradually removes noise until the result matches the text prompt, optionally incorporating an image provided by the user. The model refines the generated content step by step to meet the prompt's requirements. Finally, the model upscales the generated image to a higher quality and outputs a synthetic image.
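
The iterative denoising loop described above can be sketched with the sampling rule from denoising diffusion probabilistic models (DDPM), one common formulation of diffusion sampling. To keep the sketch self-contained, the "dataset" is a single 4-pixel image, so the noise predictor can be written in closed form; in a real text-to-image model, a trained neural network conditioned on the text prompt predicts the noise instead. The linear noise schedule and T = 1000 steps are common defaults assumed here.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)      # linear noise schedule (assumed)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)         # cumulative signal-retention factors

target = np.array([0.9, -0.5, 0.1, 0.7])  # the single toy "training image"

def predict_noise(x_t: np.ndarray, t: int) -> np.ndarray:
    """Exact noise predictor for a dataset containing only `target`.
    A real model would learn this function and condition it on a prompt."""
    return (x_t - np.sqrt(alpha_bars[t]) * target) / np.sqrt(1.0 - alpha_bars[t])

def sample(seed: int = 0) -> np.ndarray:
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(target.shape)  # start from pure noise
    for t in reversed(range(T)):
        eps = predict_noise(x, t)
        # Remove the predicted noise (DDPM mean update).
        x = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            # Re-inject a smaller amount of fresh noise, except at the last step.
            x = x + np.sqrt(betas[t]) * rng.standard_normal(target.shape)
    return x

print(np.round(sample(), 3))  # should print the target values
```

Because the toy dataset holds only one image, every seed ends at that image; with a learned predictor trained on many images, different starting noise would produce different images.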

3. Fine-Tuning Large Language Models and Text-to-Image Models

LLMs can comprehend and generate human-like text across various topics and tasks. They excel at language translation, summarization, question answering, and more. However, their out-of-the-box performance might not meet the specific requirements of a particular enterprise or industry. Just as a new team member needs on-the-job training to understand the complexities of their role within a specific company, LLMs require fine-tuning to adapt to a particular organization and its domain-specific language.

Without fine-tuning, LLMs might lack accuracy or produce outputs that do not align with the specific needs of a given enterprise, much like a new team member attempting tasks without understanding the company's processes or expectations. The same logic applies to malicious use: adversaries who want to operate at large scale with high accuracy must train a model that accurately mimics their target population.

Fine-tuning a model involves further training it on data tailored to a specific task or operation, including, in the adversarial case, the operations of the adversary. Because generative foundation models are highly adaptable, fine-tuning them is relatively easy. For instance, text-to-image models may need only five to ten images to be fine-tuned for a specific person or class, while LLMs may need only about 100 labeled examples per class or person. Although creating foundation models is expensive and time-consuming, adapting them to specific downstream tasks is comparatively easy once they are built.
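
A common, inexpensive way to adapt a pre-trained model is to keep its weights frozen and train only a small task-specific layer on top, using a modest labeled set. The NumPy sketch below illustrates that pattern. The "pre-trained encoder" is a hypothetical stand-in (a fixed random nonlinear projection), and the 100-example dataset is synthetic, so this shows the mechanics of adapting a frozen base model rather than the fine-tuning procedure of any particular LLM or text-to-image model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical frozen "pre-trained" encoder: a fixed nonlinear projection.
W_enc = rng.normal(scale=1 / np.sqrt(10), size=(10, 32))

def encode(x: np.ndarray) -> np.ndarray:
    return np.tanh(x @ W_enc)

# Small labeled dataset: 100 examples from two synthetic classes.
n = 100
y = rng.integers(0, 2, size=n)
x = rng.normal(size=(n, 10)) + np.where(y[:, None] == 1, 1.0, -1.0)

def fine_tune_head(features, labels, lr=0.1, steps=1000):
    """Train a logistic-regression head by gradient descent; the encoder is never updated."""
    w = np.zeros(features.shape[1])
    b = 0.0
    for _ in range(steps):
        p = 1 / (1 + np.exp(-(features @ w + b)))
        grad = p - labels
        w -= lr * features.T @ grad / len(labels)
        b -= lr * grad.mean()
    return w, b

W_before = W_enc.copy()
feats = encode(x)
w, b = fine_tune_head(feats, y)
accuracy = np.mean(((feats @ w + b) > 0) == y)
print(f"training accuracy: {accuracy:.2f}")
```

Only the 33 head parameters are trained here, which is why a small labeled set can be enough; fine-tuning all weights of a large model follows the same idea at much greater cost.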

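4. Variational Autoencoders (VAEs)

VAEs are generative models that learn the underlying probability distribution of a dataset and generate new samples using an encoder-decoder architecture. The encoder network maps input data to a distribution over a lower-dimensional latent space, and the decoder network reconstructs the original data from a latent code sampled from that distribution. The VAE is trained to minimize the reconstruction error between the input and reconstructed data, together with a regularization term (the Kullback-Leibler divergence) that keeps the latent distribution close to a simple prior, typically a standard normal distribution. New samples are generated by decoding points drawn from that prior.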
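
The VAE objective is easier to see in code. The NumPy sketch below runs one forward pass of a tiny VAE with randomly initialized linear encoder and decoder weights (hypothetical sizes, no training loop) and computes the loss: a squared-error reconstruction term plus the KL divergence between the encoder's Gaussian and a standard normal prior. Sampling uses the reparameterization trick, z = mu + sigma * eps, which is what lets gradients flow through the sampling step when such a model is trained with an autodiff framework.

```python
import numpy as np

rng = np.random.default_rng(0)
input_dim, latent_dim = 8, 2

# Randomly initialized linear encoder/decoder weights (illustrative only).
W_mu = rng.normal(scale=0.1, size=(input_dim, latent_dim))
W_logvar = rng.normal(scale=0.1, size=(input_dim, latent_dim))
W_dec = rng.normal(scale=0.1, size=(latent_dim, input_dim))

def encode(x):
    """Map inputs to the mean and log-variance of a Gaussian over z."""
    return x @ W_mu, x @ W_logvar

def reparameterize(mu, logvar):
    """Sample z = mu + sigma * eps with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * logvar) * eps

def decode(z):
    return z @ W_dec

def vae_loss(x):
    mu, logvar = encode(x)
    z = reparameterize(mu, logvar)
    x_hat = decode(z)
    recon = np.sum((x - x_hat) ** 2, axis=1)                          # reconstruction error
    kl = -0.5 * np.sum(1 + logvar - mu**2 - np.exp(logvar), axis=1)  # KL(q(z|x) || N(0, I))
    return np.mean(recon + kl), np.mean(recon), np.mean(kl)

x = rng.normal(size=(16, input_dim))  # a batch of 16 synthetic inputs
total, recon, kl = vae_loss(x)
print(f"loss={total:.3f} (reconstruction={recon:.3f}, KL={kl:.3f})")

# Generating new data: decode samples drawn from the prior.
new_samples = decode(rng.standard_normal((4, latent_dim)))
```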

5. Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are deep learning models used for various tasks, including generating realistic images. GANs consist of two neural networks, the generator and the discriminator, which are trained against each other in a process that can be broken down into the following steps:

i. Generator network

The main goal of the generator is to produce synthetic images that resemble those in the training dataset. It takes random noise as input and outputs an image. The generator can be considered a counterfeit artist trying to create convincing forgeries.

ii. Discriminator network

The discriminator's task is to distinguish between authentic images from the training dataset and fake images created by the generator. The discriminator can be considered an art expert trying to identify forgeries.

iii. Training process

The generator and discriminator are trained simultaneously in a two-player minimax game. Here is how it works:

The generator creates a batch of fake images using random noise as input. A batch of real images is sampled from the training dataset.

The discriminator is trained to correctly classify the real images as real and the fake images as fake. This is typically done using binary cross-entropy loss.

The generator's parameters are updated to maximize the discriminator's error in classifying the generated images as fake. In other words, the generator is trained to "fool" the discriminator.

iv. Iterative process

These steps are repeated for a predefined number of iterations or until a convergence criterion is met. As training progresses, the generator gets better at producing realistic images, while the discriminator gets better at identifying fake ones. Ideally, the generator eventually produces images the discriminator cannot distinguish from real ones.

v. Generating new images

Once training is complete, the generator can create new, realistic images when given random noise as input.
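
The training loop above can be sketched end to end in NumPy on a deliberately tiny problem: the "images" are single numbers drawn from a normal distribution, the generator is a linear map of noise, and the discriminator is logistic regression. The gradients are written out by hand, and the generator uses the common non-saturating loss (maximize log D(G(z))) rather than literally minimizing log(1 - D(G(z))). All sizes, learning rates, and the target distribution are illustrative assumptions. Because this discriminator is linear, it can only tell the two distributions apart by their means, so at best the generator learns to match the mean; GAN training is also notoriously unstable, so no particular final values are claimed here.

```python
import numpy as np

def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-np.clip(s, -30, 30)))

def train_gan(steps=2000, batch=64, lr=0.01, seed=0):
    rng = np.random.default_rng(seed)
    a, b = 1.0, 0.0   # generator: G(z) = a * z + b
    w, c = 0.0, 0.0   # discriminator: D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        # Sample real data and generate fakes from random noise.
        x_real = rng.normal(4.0, 1.25, batch)
        z = rng.standard_normal(batch)
        x_fake = a * z + b

        # Discriminator step: minimize binary cross-entropy
        # -log D(real) - log(1 - D(fake)).
        d_real, d_fake = sigmoid(w * x_real + c), sigmoid(w * x_fake + c)
        grad_w = np.mean(-(1 - d_real) * x_real) + np.mean(d_fake * x_fake)
        grad_c = np.mean(-(1 - d_real)) + np.mean(d_fake)
        w, c = w - lr * grad_w, c - lr * grad_c

        # Generator step: minimize -log D(G(z)) so that fakes look "real".
        d_fake = sigmoid(w * x_fake + c)
        grad_x = -(1 - d_fake) * w          # derivative of -log sigmoid(w*x + c) w.r.t. x
        grad_a, grad_b = np.mean(grad_x * z), np.mean(grad_x)
        a, b = a - lr * grad_a, b - lr * grad_b
    return a, b

a, b = train_gan()
samples = a * np.random.default_rng(1).standard_normal(1000) + b
print(f"generated mean ~ {samples.mean():.2f} (real mean is 4.0)")
```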

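6. Autoregressive models

Autoregressive models are statistical models used to predict future values based on past values. In generative AI, they generate new data points that match the training data by producing one element at a time, each conditioned on the elements generated before it. Classical autoregressive models assume that a variable's value at a given time step is a linear combination of its past values plus noise; neural autoregressive models, such as GPT-style language models, replace that linear combination with a learned neural network. Autoregressive models are popular in various applications, such as natural language processing, image synthesis, and time series forecasting.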
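
A classical autoregressive model of order 2, AR(2), makes the "linear combination of past values" idea concrete: x_t = phi_1 x_{t-1} + phi_2 x_{t-2} + noise. The NumPy sketch below simulates a series with assumed coefficients, recovers them by least squares, and then generates new values one step at a time, each conditioned on the values before it. Neural autoregressive generators follow the same one-step-at-a-time loop but replace the linear formula with a learned network.

```python
import numpy as np

def simulate_ar(phi, n, noise_std=1.0, seed=0):
    """Generate n points from x_t = sum_k phi[k] * x_{t-1-k} + noise."""
    rng = np.random.default_rng(seed)
    p = len(phi)
    x = np.zeros(n + p)
    for t in range(p, n + p):
        past = x[t - p:t][::-1]             # most recent value first
        x[t] = np.dot(phi, past) + noise_std * rng.standard_normal()
    return x[p:]

def fit_ar(x, p):
    """Estimate AR(p) coefficients by ordinary least squares."""
    rows = [x[t - p:t][::-1] for t in range(p, len(x))]
    X, y = np.array(rows), x[p:]
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

def generate(coef, history, steps, noise_std=1.0, seed=1):
    """Sample new values one at a time, each conditioned on the previous p values."""
    rng = np.random.default_rng(seed)
    out = list(history)
    p = len(coef)
    for _ in range(steps):
        past = np.array(out[-p:][::-1])
        out.append(float(np.dot(coef, past) + noise_std * rng.standard_normal()))
    return out[len(history):]

true_phi = np.array([0.6, -0.3])          # assumed "true" coefficients
series = simulate_ar(true_phi, 5000)
phi_hat = fit_ar(series, 2)
print(np.round(phi_hat, 2))               # close to [0.6, -0.3]
new_values = generate(phi_hat, series[-2:], 5)
```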

7. Stable Diffusion

Stable Diffusion is a text-to-image model that creates images using a forward diffusion process and a reverse diffusion process. The forward process gradually adds noise to an image, while the reverse process removes it. The model learns to remove noise effectively by capturing the structure of the data distribution, which allows it to generate new, high-quality images by reversing the forward diffusion process, starting from pure noise.
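
The forward diffusion process has a convenient closed form: given a noise schedule beta_1, ..., beta_T and alpha_bar_t = (1 - beta_1)(1 - beta_2)...(1 - beta_t), a noisy version of an image x_0 at step t can be drawn directly as x_t = sqrt(alpha_bar_t) x_0 + sqrt(1 - alpha_bar_t) eps, where eps is standard normal noise. This sketch follows the DDPM formulation, with a linear schedule and T = 1000 assumed as common defaults. Note that Stable Diffusion applies the process to a compressed latent representation of the image rather than to raw pixels, and that the reverse process requires a trained noise-prediction network, which is not shown.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)        # linear noise schedule (assumed)
alpha_bars = np.cumprod(1.0 - betas)      # fraction of signal kept after t steps

def add_noise(x0: np.ndarray, t: int, rng: np.random.Generator) -> np.ndarray:
    """Sample x_t ~ q(x_t | x_0) in one shot instead of t sequential steps."""
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps

rng = np.random.default_rng(0)
image = rng.uniform(-1, 1, size=(8, 8))   # stand-in for a normalized image
for t in (0, 250, 500, 999):
    noisy = add_noise(image, t, rng)
    corr = np.corrcoef(image.ravel(), noisy.ravel())[0, 1]
    print(f"t={t:4d}  signal weight={np.sqrt(alpha_bars[t]):.3f}  corr with original={corr:.2f}")
```

The signal weight shrinks toward zero as t grows, so by the final step the sample is almost pure noise; this is the starting point the reverse process works back from.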

8. Transformers

The Transformer is a neural network architecture that, in its original form, uses an encoder-decoder structure to generate an output. It does not rely on recurrence or convolution. Instead, it stacks identical modules several times to process the input data. The encoder maps the input sequence to a series of continuous representations, while the decoder generates an output sequence. The modules consist mainly of multi-head attention and feed-forward layers. Many generative LLMs, such as the GPT family, use only the decoder part of this architecture.
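
The core operation inside each multi-head attention layer is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V. The NumPy sketch below implements a single attention head for one sequence, with an optional causal mask of the kind used in decoder-only models. The sequence length, dimensions, and random projection weights are illustrative assumptions, and a full Transformer adds multiple heads, residual connections, layer normalization, positional information, and the feed-forward sublayers mentioned above.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V, causal=False):
    """Return attention outputs and weights for one head."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)            # (seq_len, seq_len) similarity scores
    if causal:
        # Decoder-style mask: position i may only attend to positions <= i.
        mask = np.triu(np.ones_like(scores, dtype=bool), k=1)
        scores = np.where(mask, -np.inf, scores)
    weights = softmax(scores, axis=-1)
    return weights @ V, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8
x = rng.normal(size=(seq_len, d_model))        # embeddings for 4 tokens
W_q, W_k, W_v = (rng.normal(scale=0.3, size=(d_model, d_k)) for _ in range(3))
out, weights = scaled_dot_product_attention(x @ W_q, x @ W_k, x @ W_v, causal=True)
print(out.shape)                # (4, 8)
print(np.round(weights, 2))     # each row sums to 1; upper triangle is 0
```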

9. Chatbots

There are two main types of chatbots: retrieval-based and generative. Retrieval-based chatbots select responses from a predefined set, giving simple and direct answers to user inquiries, while generative chatbots construct new, contextually appropriate responses. Chatbots use various techniques to choose or construct a response, such as tracking the ongoing conversation, using the history of user exchanges, and matching responses to a semantic understanding of the inquiry.
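
A retrieval-based chatbot can be as simple as picking the stored answer whose question best matches the user's message. The sketch below scores candidates by Jaccard word overlap; the FAQ entries, the similarity threshold, and the fallback message are hypothetical, and real systems typically compare semantic embeddings instead. A generative chatbot would instead pass the conversation history to a language model and let it compose a new reply.

```python
import re

FAQ = {
    "what are your opening hours": "We are open 9am to 5pm, Monday to Friday.",
    "how do i reset my password": "Use the 'Forgot password' link on the sign-in page.",
    "where is my order": "You can track your order from the Orders page.",
}

def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9']+", text.lower()))

def jaccard(a: set[str], b: set[str]) -> float:
    return len(a & b) / len(a | b) if a | b else 0.0

def reply(message: str, threshold: float = 0.2) -> str:
    """Return the canned answer whose stored question best matches the message."""
    msg = tokens(message)
    best_q = max(FAQ, key=lambda q: jaccard(msg, tokens(q)))
    if jaccard(msg, tokens(best_q)) < threshold:
        return "Sorry, I don't know how to help with that yet."
    return FAQ[best_q]

print(reply("How can I reset my password?"))
```

Here the message shares five of seven distinct words with the password question, so that canned answer is returned; a message with no overlapping words falls below the threshold and gets the fallback.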

10. Multimodal models

Multimodal models process multiple data types simultaneously, creating richer outputs by integrating information from different modalities. For example, they can generate an image based on a text description. DALL-E 2, which generates images from text, and OpenAI's GPT-4, which accepts both text and images as input, are leading examples. However, integrating multiple modalities is complex and poses significant challenges.

AI is evolving rapidly, with generative AI supplementing, and in some areas replacing, traditional machine learning (ML). Traditional ML focuses on extracting meaningful features from data, training models, and tuning them for optimal performance. Generative AI instead emphasizes prompt engineering and uses foundation and fine-tuned large language models (LLMs) for more sophisticated content generation.

The AI architecture for traditional ML includes ML frameworks, APIs, SDKs, databases, and MLOps tools. For generative AI, the tech stack includes Gen AI orchestration tools, LLMs, vector databases, and LLMOps tools. There is also a growing emphasis on AI governance, to ensure the ethical and responsible use of AI, and on dialogue interfaces, to enable natural interactions with AI systems.

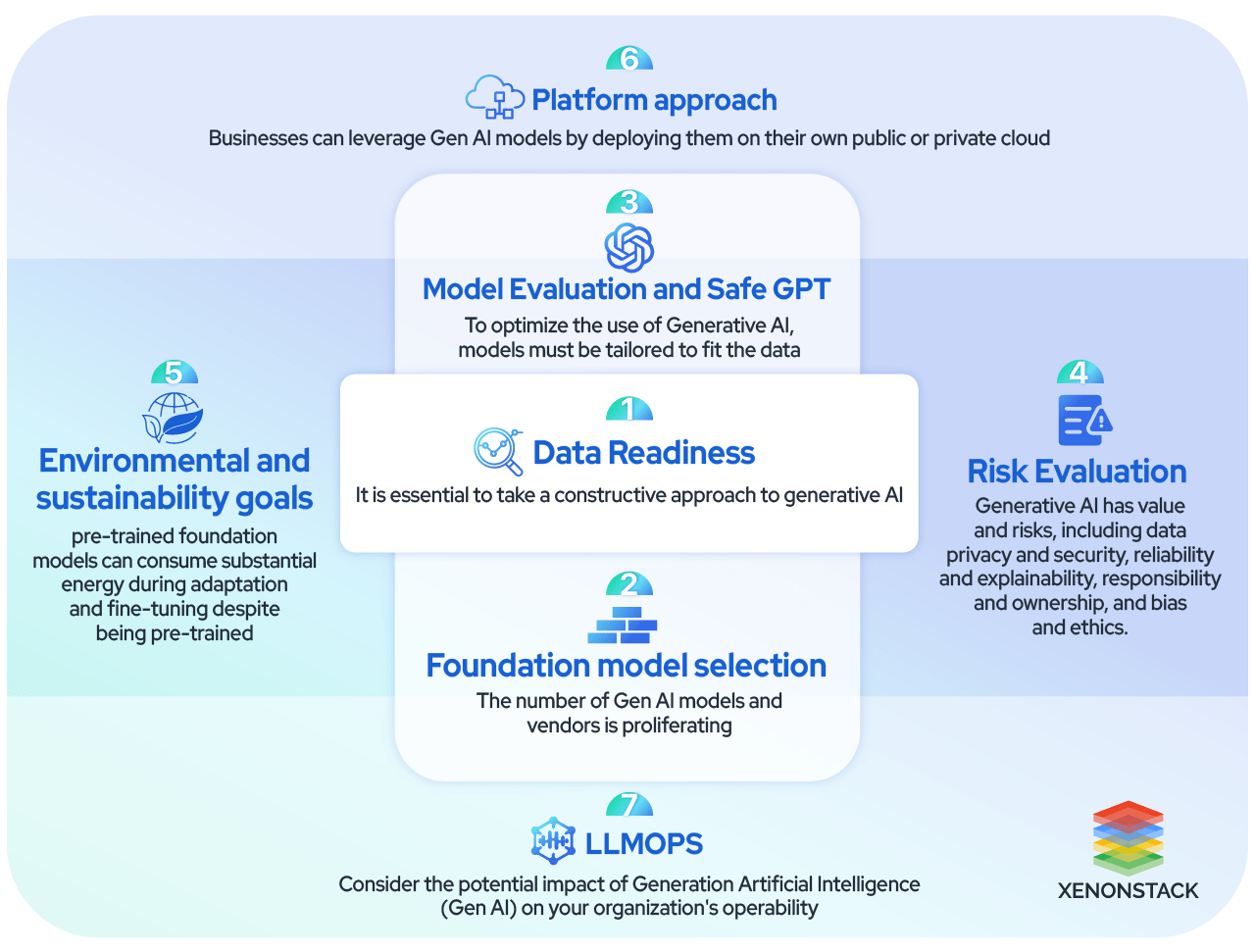

Architecture considerations for Enterprise-Ready generative AI Solutions

By using pre-trained foundation models, businesses can considerably reduce the number of AI models they need to create and maintain. Instead of building a new model for each use case, they can fine-tune a small set of existing models, which fundamentally changes their architectural approach to AI. Business leaders should weigh seven key considerations to prepare for the upcoming era of Gen AI.

1. Readiness and data assessment

It is essential to take a structured approach to generative AI. Businesses can set themselves up for success by evaluating their team's readiness, workflows, and data, which helps identify gaps and areas for improvement. Generative AI depends on high-quality, usable data; if the data is not ready, cleansing or enrichment can help ensure the generative AI model delivers the desired outcomes and performs efficiently.

2. Foundation model selection

The number of Gen AI models and vendors is proliferating. OpenAI and Cohere are examples of pure-play vendors offering next-generation models developed through fundamental research and trained on publicly available data. Mature open-source models are available via hubs like Hugging Face. Cloud hyperscalers also partner with pure-play vendors, adopt open-source models, and provide full-stack services. Models pre-trained on specialized domain knowledge are becoming increasingly accessible, and smaller, lower-cost foundation models such as Databricks' Dolly make building or customizing Gen AI more accessible. However, careful evaluation is needed to ensure that the chosen option fits the organization's needs and requirements.

3. Model Evaluation and Safe GPT

To get the most out of generative AI, models must be tailored to an organization's data. This can be done by acquiring pre-trained models, incorporating proprietary data, or building new models. However, a modern data infrastructure is necessary to fully realize generative AI's benefits. When integrating GPT models, organizations must prioritize security, reliability, and responsibility, and consider integration and interoperability frameworks. Companies must also evaluate the Responsible AI implications and develop mitigation techniques so that AI models meet essential requirements without compromising enterprise security.

4. Risk Evaluation

Generative AI brings both value and risks, including risks to data privacy and security, reliability and explainability, responsibility and ownership, and bias and ethics. These risks can manifest in various ways, such as regulatory fallout from undisclosed data collection and retention, errors in generated content caused by deficiencies in the training data, legal ownership disputes, and discriminatory content resulting from biased training data. Enterprises need to be aware of these risks and work with legal teams to manage IP and evolve ESG goals, while staying vigilant about data privacy and security, fact-checking AI-generated content, and moderating content to remove biases or stereotypes.

5. Environmental and sustainability goals

Even though foundation models are pre-trained, they can still consume substantial energy during adaptation and fine-tuning. Developers considering pre-training a model or creating one from scratch should keep this in mind as well. Energy consumption can vary significantly depending on how foundation models are obtained: by buying them, boosting (adapting) existing ones, or building new ones. Neglecting this issue could enlarge an organization's carbon footprint, particularly as Gen AI applications are scaled up across the enterprise. It is therefore crucial to consider potential environmental impacts beforehand and explore the available options.
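
A rough estimate makes the energy discussion concrete. Energy is approximately the number of accelerators times their average power draw times the run time, scaled by the data center's power usage effectiveness (PUE); emissions then follow from multiplying by the grid's carbon intensity. The sketch below encodes that arithmetic; every number in the example (8 GPUs at 0.4 kW for 24 hours, a PUE of 1.2, and 0.4 kg CO2e per kWh) is a hypothetical input, not a measurement of any real model.

```python
def training_energy_kwh(num_devices: int, avg_power_kw: float, hours: float,
                        pue: float = 1.2) -> float:
    """Facility energy: device energy scaled by power usage effectiveness."""
    return num_devices * avg_power_kw * hours * pue

def emissions_kg(energy_kwh: float, kg_co2e_per_kwh: float) -> float:
    """Emissions implied by the grid's carbon intensity."""
    return energy_kwh * kg_co2e_per_kwh

energy = training_energy_kwh(num_devices=8, avg_power_kw=0.4, hours=24)
print(f"{energy:.2f} kWh, {emissions_kg(energy, 0.4):.2f} kg CO2e")  # 92.16 kWh, 36.86 kg CO2e
```

Because every factor multiplies, the same formula shows where the levers are: fewer device-hours, more efficient facilities, or a lower-carbon grid each reduce the footprint proportionally.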

6. Platform approach

Businesses can use Gen AI models by deploying them on their own public or private cloud, which gives them complete control over the models, or by accessing Gen AI through a managed cloud service from an external vendor for faster and simpler implementation. However, complete control requires identifying and managing the necessary infrastructure, version-controlling the models, and developing the required talent and skills. Although dedicated infrastructure provides better cost predictability, it requires additional effort and complexity to achieve enterprise-scale performance.

7. LLMOps

Consider the potential impact of generative artificial intelligence (Gen AI) on your organization's operations. Many companies have developed Machine Learning Operations (MLOps) frameworks to standardize the production of ML applications. However, these frameworks need to be reviewed to incorporate Large Language Model Operations (LLMOps) and Gen AIOps considerations.

This includes accommodating changes in DevOps, Continuous Integration/Continuous Deployment/Continuous Testing (CI/CD/CT), model management, model monitoring, prompt management, and data/knowledge management in pre-production and production environments. The MLOps approach must evolve for foundation models, covering processes across the entire application lifecycle. Autonomous agent tools such as AutoGPT suggest that AI itself may increasingly help automate the production, monitoring, and calibration of models and model interactions to deliver business service level agreements (SLAs).

How to Select a Foundation Model?

Selecting a foundation model, and deciding between open- and closed-source options, requires a thorough analysis. Factors to consider include:

i. Project requirements and objectives

What specific tasks and goals does your project require the model to achieve?

ii. Cost implications

What are the costs of each option, including initial, maintenance, and future expenses?

iii. Data privacy and security

How does each model handle sensitive data? Is it secure enough for projects involving confidential or personal information?

iv. Customization and control

Do you need advanced customization options that let you adjust and modify model parameters extensively?

v. Support and community

How strong is the community support for the model, and does it align with your team's expertise and resources?

vi. Scalability and performance

Can the model handle growing volumes of data and increasing task complexity, both now and in the future?

vii. Legal and ethical considerations

What ethical and legal implications need to be considered, including potential biases in the model and restrictions on data usage and commercial applications?

viii. Availability of skills and resources

Is your team equipped to implement and maintain an open-source model, or would a closed-source, ready-made solution be more suitable?

ix. Long-term viability

How sustainable is the model in terms of ongoing support and development, and will it remain useful over the long term?

x. Integration with existing systems

How well does the model integrate with your current infrastructure and workflows, particularly in complex or established operational environments?
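
One way to apply factors like these is a simple weighted scoring matrix: rate each candidate on each factor, weight the factors by importance, and compare the totals. This is a general decision-making aid rather than a method prescribed above, it covers only a subset of the factors for brevity, and the candidate names, weights, and 1-5 ratings below are placeholders to be replaced with an organization's own assessments.

```python
# Hypothetical weights (importance of each factor) and 1-5 ratings per candidate.
WEIGHTS = {
    "requirements_fit": 0.25,
    "cost": 0.20,
    "privacy_security": 0.20,
    "customization": 0.10,
    "support": 0.10,
    "scalability": 0.15,
}

CANDIDATES = {
    "closed_source_api": {"requirements_fit": 4, "cost": 3, "privacy_security": 3,
                          "customization": 2, "support": 5, "scalability": 5},
    "open_source_self_hosted": {"requirements_fit": 4, "cost": 4, "privacy_security": 5,
                                "customization": 5, "support": 3, "scalability": 3},
}

def weighted_score(ratings: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of ratings; dividing by the weight total normalizes the weights."""
    total_weight = sum(weights.values())
    return sum(weights[f] * ratings[f] for f in weights) / total_weight

ranking = sorted(CANDIDATES, key=lambda name: weighted_score(CANDIDATES[name], WEIGHTS),
                 reverse=True)
for name in ranking:
    print(f"{name}: {weighted_score(CANDIDATES[name], WEIGHTS):.2f}")
```

The output is only as good as the ratings and weights fed into it; its main value is making trade-offs explicit so they can be discussed.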